Publications

Publications by category, in reverse chronological order.

An up-to-date list is available on Google Scholar.

2024

-

RAPiD-Seg: Range-Aware Pointwise Distance Distribution Networks for 3D LiDAR Segmentation. Li Li, Hubert P. H. Shum, and Toby P. Breckon. In European Conference on Computer Vision (ECCV), 2024. 🏆 Oral Presentation (2.3% = 200/8585)

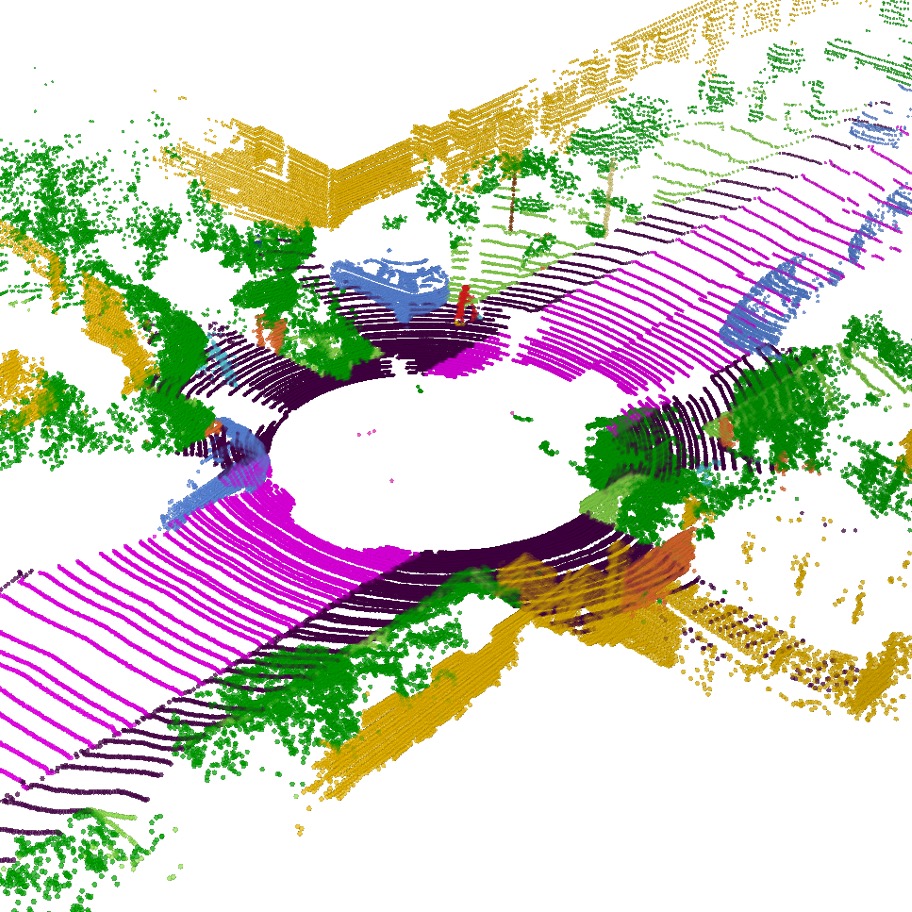

3D point clouds play a pivotal role in outdoor scene perception, especially in the context of autonomous driving. Recent advancements in 3D LiDAR segmentation often focus intensely on the spatial positioning and distribution of points for accurate segmentation. However, these methods, while robust in variable conditions, encounter challenges due to sole reliance on coordinates and point intensity, leading to poor isometric invariance and suboptimal segmentation. To tackle this challenge, our work introduces Range-Aware Pointwise Distance Distribution (RAPiD) features and the associated RAPiD-Seg architecture. Our RAPiD features exhibit rigid transformation invariance and effectively adapt to variations in point density, with a design focus on capturing the localized geometry of neighboring structures. They utilize inherent LiDAR isotropic radiation and semantic categorization for enhanced local representation and computational efficiency, while incorporating a 4D distance metric that integrates geometric and surface material reflectivity for improved semantic segmentation. To effectively embed high-dimensional RAPiD features, we propose a double-nested autoencoder structure with a novel class-aware embedding objective to encode high-dimensional features into manageable voxel-wise embeddings. Additionally, we propose RAPiD-Seg which incorporates a channel-wise attention fusion and two effective RAPiD-Seg variants, further optimizing the embedding for enhanced performance and generalization. Our method outperforms contemporary LiDAR segmentation work in terms of mIoU on SemanticKITTI (76.1) and nuScenes (83.6) datasets.
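As a rough illustration of the rigid transformation invariance mentioned in the abstract, the sketch below builds a simple distance-based local feature (for each point, the sorted distances to its k nearest neighbours) and checks that it is unchanged by a rotation and translation. It is not the RAPiD implementation: the range-aware weighting, the 4D reflectivity-aware metric, and the embedding network are all omitted, and the function names and sample points are hypothetical.

```python
import math


def knn_distance_features(points, k):
    """For each 3D point, return the sorted distances to its k nearest neighbours."""
    features = []
    for i, p in enumerate(points):
        # Distances from point i to every other point, smallest first.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        features.append(dists[:k])
    return features


def rotate_z_and_translate(points, angle, offset):
    """Apply a rigid transformation: rotation about the z-axis, then a translation."""
    c, s = math.cos(angle), math.sin(angle)
    return [
        (c * x - s * y + offset[0], s * x + c * y + offset[1], z + offset[2])
        for x, y, z in points
    ]


# Hypothetical toy point cloud (not taken from any dataset).
points = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.0, 2.0, 0.0),
    (1.0, 1.0, 3.0),
    (4.0, 0.5, 1.0),
]
moved = rotate_z_and_translate(points, angle=0.7, offset=(5.0, -2.0, 1.5))

original_features = knn_distance_features(points, k=3)
moved_features = knn_distance_features(moved, k=3)

# Largest element-wise change in the features caused by the rigid motion.
max_difference = max(
    abs(a - b)
    for row_a, row_b in zip(original_features, moved_features)
    for a, b in zip(row_a, row_b)
)
print(f"largest feature change after the rigid motion: {max_difference:.2e}")
```

Raw coordinates change under the same motion, whereas the distance-based features differ only by floating-point rounding, which is the property the abstract refers to.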

@inproceedings{li2024rapidseg, title = {{{RAPiD-Seg}}: {{Range-Aware}} {{Pointwise Distance Distribution}} {{Networks}} for {{3D LiDAR Segmentation}}}, gh_repo = {rapid_seg}, author = {Li, Li and Shum, Hubert P. H. and Breckon, Toby P.}, keywords = {point cloud, semantic segmentation, invariance feature, pointwise distance distribution, autonomous driving}, year = {2024}, month = jul, publisher = {{Springer}}, booktitle = {European Conference on Computer Vision (ECCV)}, note = {🏆 <strong style="color:#CC0000;">Oral Presentation</strong> (2.3% = 200/8585)} } -

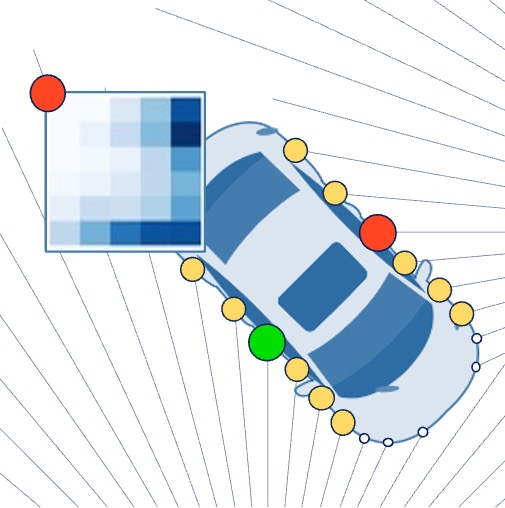

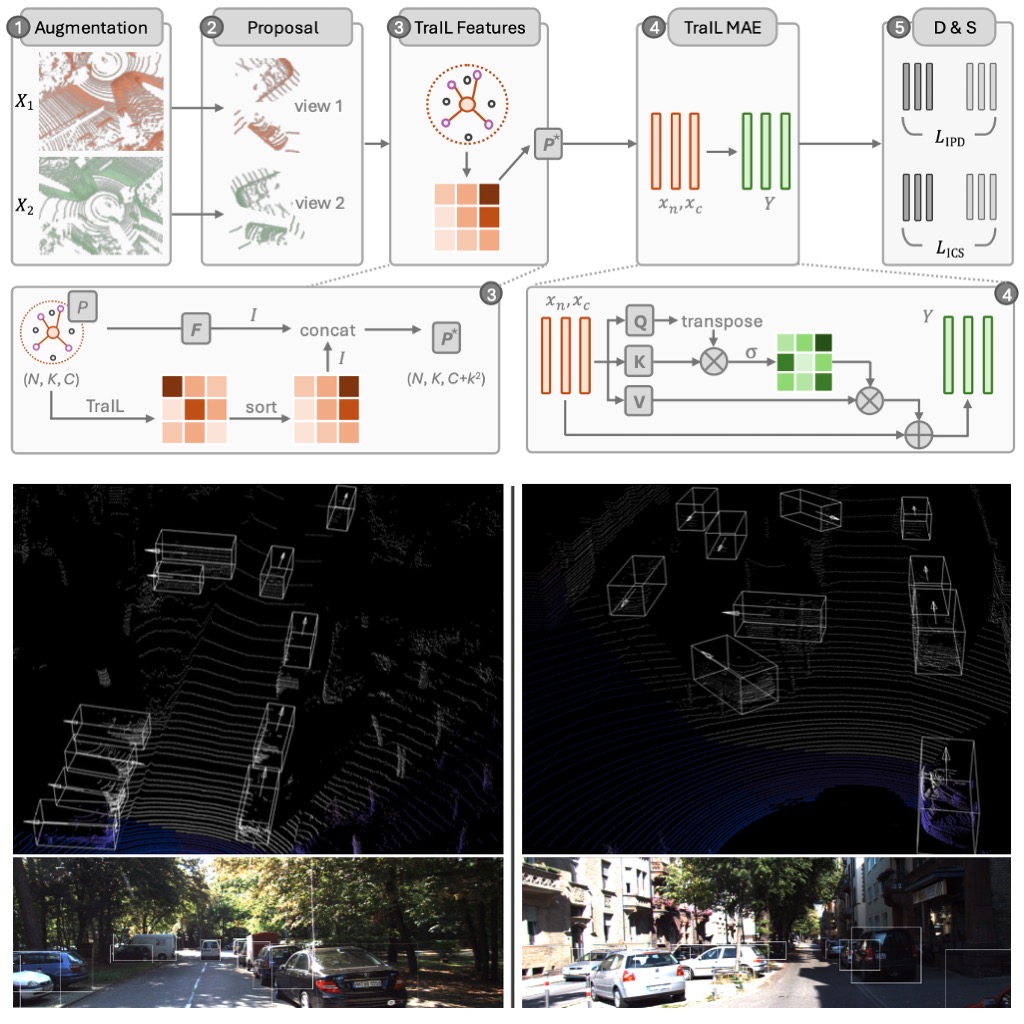

TraIL-Det: Transformation-Invariant Local Feature Networks for 3D LiDAR Object Detection with Unsupervised Pre-Training. Li Li, Tanqiu Qiao, Hubert P. H. Shum, and Toby P. Breckon. In British Machine Vision Conference (BMVC), 2024

3D point clouds are essential for perceiving outdoor scenes, especially within the realm of autonomous driving. Recent advances in 3D LiDAR Object Detection focus primarily on the spatial positioning and distribution of points to ensure accurate detection. However, despite their robust performance in variable conditions, these methods are hindered by their sole reliance on coordinates and point intensity, resulting in inadequate isometric invariance and suboptimal detection outcomes. To tackle this challenge, our work introduces Transformation-Invariant Local (TraIL) features and the associated TraIL-Det architecture. Our TraIL features exhibit rigid transformation invariance and effectively adapt to variations in point density, with a design focus on capturing the localized geometry of neighboring structures. They utilize the inherent isotropic radiation of LiDAR to enhance local representation, improve computational efficiency, and boost detection performance. To effectively process the geometric relations among points within each proposal, we propose a Multi-head self-Attention Encoder (MAE) with asymmetric geometric features to encode high-dimensional TraIL features into manageable representations. Our method outperforms contemporary self-supervised 3D object detection approaches in terms of mAP on KITTI (67.8, 20% label, moderate) and Waymo (68.9, 20% label, moderate) datasets under various label ratios (20%, 50%, and 100%).

@inproceedings{li24trail, gh_repo = {rapid_seg}, author = {Li, Li and Qiao, Tanqiu and Shum, Hubert P. H. and Breckon, Toby P.}, title = {{TraIL-Det: Transformation-Invariant Local Feature Networks for 3D LiDAR Object Detection with Unsupervised Pre-Training}}, booktitle = {British Machine Vision Conference (BMVC)}, year = {2024}, month = jul, publisher = {BMVA}, keywords = {autonomous driving, LiDAR, 3D, point cloud, object detection, invariance feature}, category = {automotive 3Dvision}, } -

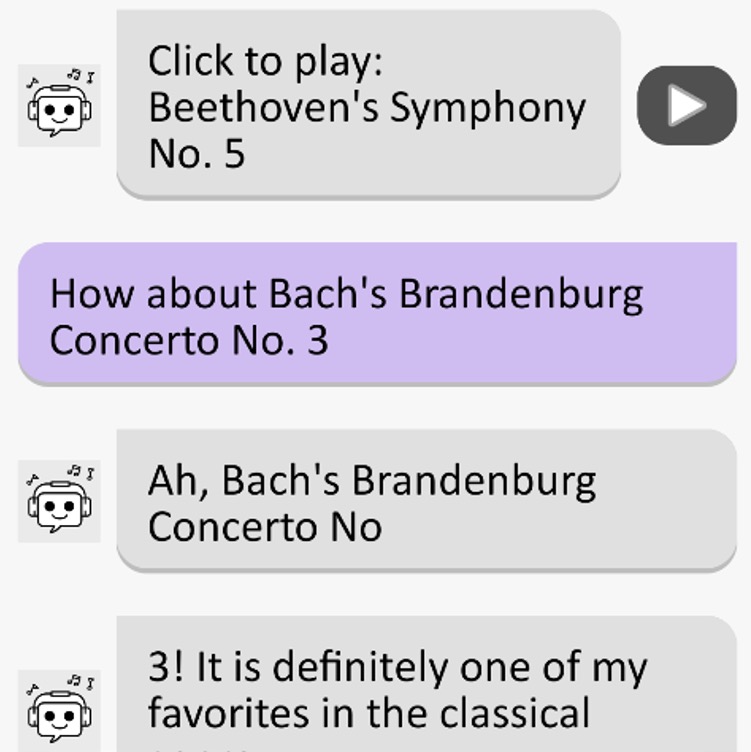

Inclusive AI-driven Music Chatbots for Older Adults. Farkhandah Aziz, Effie Law, Li Li, and Shuang Chen. In Engineering Interactive Systems Embedding AI Technologies, 2024

Today, chatbots are commonly used for music, mainly targeting young people. Yet, there is little research on adapting them for older adults. To address this, we conducted a survey with 20 older adults to understand their music preferences and needs for a chatbot. After developing a prototype based on the identified user requirements, we tested it with five older adults, who generally gave positive feedback. Moving forward, we aim to explore ways to make the music chatbot more inclusive.

@inproceedings{aziz2024inclusive, gh_repo = {ca4oa-music-chatbot}, title = {Inclusive {AI}-driven {Music} {Chatbots} for {Older} {Adults}}, booktitle = {Engineering Interactive Systems Embedding AI Technologies}, author = {Aziz, Farkhandah and Law, Effie and Li, Li and Chen, Shuang}, publisher = {ACM}, year = {2024}, month = may, } -

On Deep Learning for Geometric and Semantic Scene Understanding Using On-Vehicle 3D LiDAR. Li Li. Durham University, 2024. 🏆 The 2024 Sullivan Doctoral Thesis Prize runner-up

3D LiDAR point cloud data is crucial for scene perception in computer vision, robotics, and autonomous driving. Geometric and semantic scene understanding, involving 3D point clouds, is essential for advancing autonomous driving technologies. However, significant challenges remain, particularly in improving the overall accuracy (e.g., segmentation accuracy, depth estimation accuracy, etc.) and efficiency of these systems. To address the challenge in terms of accuracy related to LiDAR-based tasks, we present DurLAR, the first high-fidelity 128-channel 3D LiDAR dataset featuring panoramic ambient (near infrared) and reflectivity imagery. Leveraging DurLAR, which exceeds the resolution of prior benchmarks, we tackle the task of monocular depth estimation. Utilizing this high-resolution yet sparse ground truth scene depth information, we propose a novel joint supervised/self-supervised loss formulation, significantly enhancing depth estimation accuracy. To improve efficiency in 3D segmentation while ensuring the accuracy, we propose a novel pipeline that employs a smaller architecture, requiring fewer ground-truth annotations while achieving superior segmentation accuracy compared to contemporary approaches. This is facilitated by a novel Sparse Depthwise Separable Convolution (SDSC) module, which significantly reduces the network parameter count while retaining overall task performance. Additionally, we introduce a new Spatio-Temporal Redundant Frame Downsampling (ST-RFD) method that uses sensor motion knowledge to extract a diverse subset of training data frame samples, thereby enhancing computational efficiency. Furthermore, recent advancements in 3D LiDAR segmentation focus on spatial positioning and distribution of points to improve the segmentation accuracy.
The dependencies on coordinates and point intensity result in suboptimal performance and poor isometric invariance. To improve the segmentation accuracy, we introduce Range-Aware Pointwise Distance Distribution (RAPiD) features and the associated RAPiD-Seg architecture. These features demonstrate rigid transformation invariance and adapt to point density variations, focusing on the localized geometry of neighboring structures. Utilizing LiDAR isotropic radiation and semantic categorization, they enhance local representation and computational efficiency. We validate the effectiveness of our methods through extensive experiments and qualitative analysis. Our approaches surpass the state-of-the-art (SoTA) research in mIoU (for semantic segmentation) and RMSE (for depth estimation). All contributions have been accepted by peer-reviewed conferences, underscoring the advancements in both accuracy and efficiency in 3D LiDAR applications for autonomous driving.
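For readers unfamiliar with the segmentation metric quoted above, the following is a minimal sketch of mean Intersection-over-Union (mIoU) computed from per-point class labels. It uses the common definition (per-class intersection divided by union, averaged over classes that occur) and is assumed, not confirmed, to match the evaluation protocol used in the thesis; the label lists are made-up toy data.

```python
def mean_iou(predicted, target, num_classes):
    """Mean IoU over classes that appear in the prediction or the ground truth."""
    ious = []
    for c in range(num_classes):
        intersection = sum(1 for p, t in zip(predicted, target) if p == c and t == c)
        union = sum(1 for p, t in zip(predicted, target) if p == c or t == c)
        if union > 0:  # skip classes absent from both label sets
            ious.append(intersection / union)
    if not ious:
        raise ValueError("no class occurs in either label list")
    return sum(ious) / len(ious)


# Hypothetical per-point labels for eight points and three classes.
predicted = [0, 0, 1, 1, 2, 2, 2, 1]
target = [0, 1, 1, 1, 2, 2, 0, 1]

miou = mean_iou(predicted, target, num_classes=3)
print(f"mIoU = {miou:.4f}")
```

Benchmark scores such as those reported on SemanticKITTI are this quantity expressed as a percentage, accumulated over the whole evaluation split.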

@phdthesis{li2024deep, eprint = {2411.00600}, title = {On {{Deep Learning}} for {{Geometric}} and {{Semantic Scene Understanding Using On-Vehicle 3D LiDAR}}}, author = {Li, Li}, year = {2024}, month = jul, school = {Durham University}, type = {phdthesis}, } -

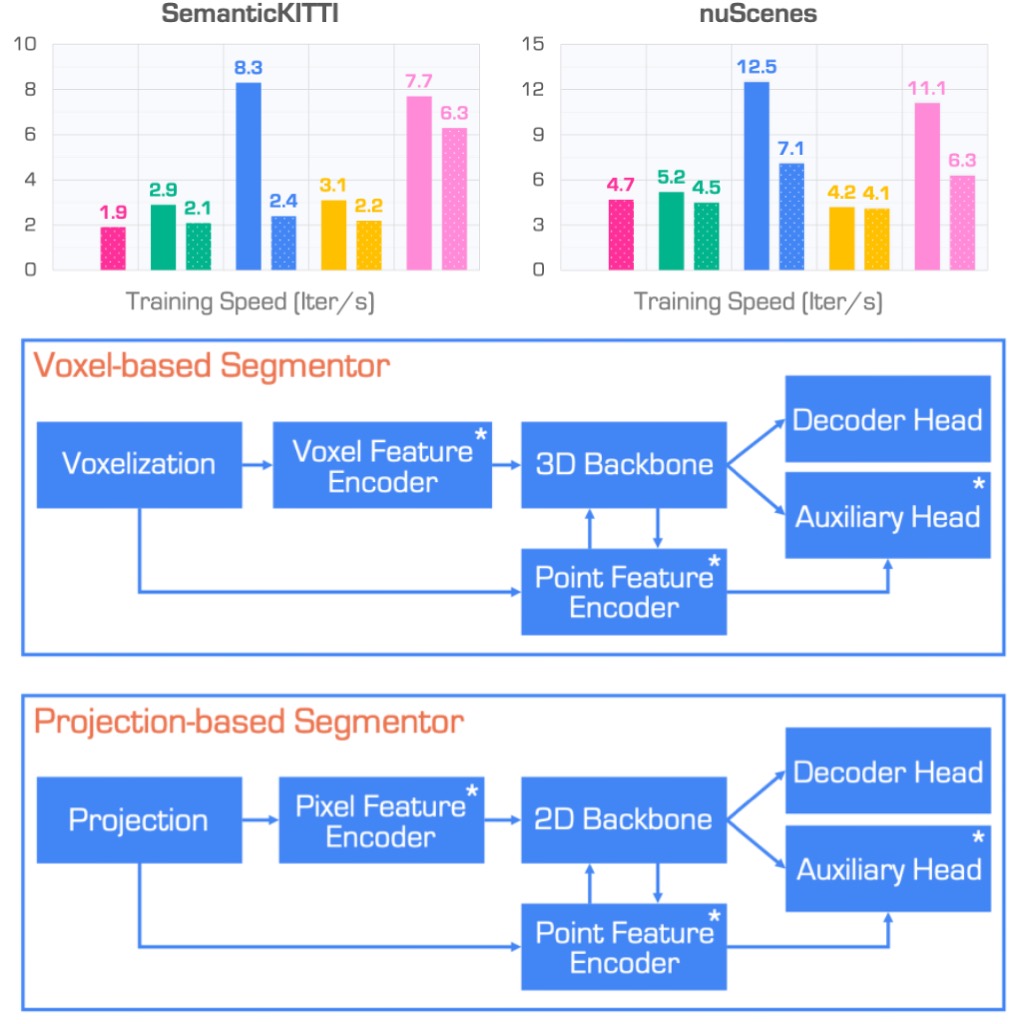

An Empirical Study of Training State-of-the-Art LiDAR Segmentation Models. Jiahao Sun, Xiang Xu, Lingdong Kong, Youquan Liu, Li Li, Chenming Zhu, Jingwei Zhang, Zeqi Xiao, Runnan Chen, Tai Wang, Wenwei Zhang, Kai Chen, and Chunmei Qing. 2024

In the rapidly evolving field of autonomous driving, precise segmentation of LiDAR data is crucial for understanding complex 3D environments. Traditional approaches often rely on disparate, standalone codebases, hindering unified advancements and fair benchmarking across models. To address these challenges, we introduce MMDetection3D-lidarseg, a comprehensive toolbox designed for the efficient training and evaluation of state-of-the-art LiDAR segmentation models. We support a wide range of segmentation models and integrate advanced data augmentation techniques to enhance robustness and generalization. Additionally, the toolbox provides support for multiple leading sparse convolution backends, optimizing computational efficiency and performance. By fostering a unified framework, MMDetection3D-lidarseg streamlines development and benchmarking, setting new standards for research and application. Our extensive benchmark experiments on widely-used datasets demonstrate the effectiveness of the toolbox. The codebase and trained models have been made publicly available, promoting further research and innovation in the field of LiDAR segmentation for autonomous driving.

@misc{sun2024empirical, title = {An {{Empirical Study}} of {{Training State-of-the-Art LiDAR Segmentation Models}}}, gh_repo = {open-mmlab/mmdetection3d}, author = {Sun, Jiahao and Xu, Xiang and Kong, Lingdong and Liu, Youquan and Li, Li and Zhu, Chenming and Zhang, Jingwei and Xiao, Zeqi and Chen, Runnan and Wang, Tai and Zhang, Wenwei and Chen, Kai and Qing, Chunmei}, year = {2024}, number = {arXiv:2405.14870}, eprint = {2405.14870}, primaryclass = {cs}, publisher = {arXiv}, doi = {10.48550/arXiv.2405.14870}, urldate = {2024-05-25}, }

2023

-

Less Is More: Reducing Task and Model Complexity for 3D Point Cloud Semantic Segmentation. Li Li, Hubert P. H. Shum, and Toby P. Breckon. In Conference on Computer Vision and Pattern Recognition (CVPR), 2023

Whilst the availability of 3D LiDAR point cloud data has significantly grown in recent years, annotation remains expensive and time-consuming, leading to a demand for semi-supervised semantic segmentation methods with application domains such as autonomous driving. Existing work very often employs relatively large segmentation backbone networks to improve segmentation accuracy, at the expense of computational costs. In addition, many use uniform sampling to reduce the ground-truth data required for learning, often resulting in sub-optimal performance. To address these issues, we propose a new pipeline that employs a smaller architecture, requiring fewer ground-truth annotations to achieve superior segmentation accuracy compared to contemporary approaches. This is facilitated via a novel Sparse Depthwise Separable Convolution module that significantly reduces the network parameter count while retaining overall task performance. To effectively sub-sample our training data, we propose a new Spatio-Temporal Redundant Frame Downsampling (ST-RFD) method that leverages knowledge of sensor motion within the environment to extract a more diverse subset of training data frame samples. To leverage the use of limited annotated data samples, we further propose a soft pseudo-label method informed by LiDAR reflectivity. Our method outperforms contemporary semi-supervised work in terms of mIoU, using less labeled data, on the SemanticKITTI (59.5@5%) and ScribbleKITTI (58.1@5%) benchmark datasets, based on a 2.3× reduction in model parameters and 641× fewer multiply-add operations whilst also demonstrating significant performance improvement on limited training data (i.e., Less is More).
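To give a feel for why a depthwise separable convolution shrinks the parameter count, the sketch below compares the weight counts of a standard 3D convolution and its depthwise separable factorisation (a per-channel spatial filter followed by a 1×1×1 pointwise mix). This is the generic, dense formulation, not the paper's sparse module, and the channel and kernel sizes are hypothetical values chosen only for illustration; biases are ignored.

```python
def standard_conv3d_params(c_in, c_out, k):
    """Weights in a standard 3D convolution: one k*k*k filter per (input, output) channel pair."""
    return k**3 * c_in * c_out


def depthwise_separable_conv3d_params(c_in, c_out, k):
    """Weights in a depthwise separable 3D convolution."""
    depthwise = k**3 * c_in   # one k*k*k spatial filter per input channel
    pointwise = c_in * c_out  # 1x1x1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes, not taken from the paper.
c_in, c_out, k = 64, 128, 3

standard = standard_conv3d_params(c_in, c_out, k)
separable = depthwise_separable_conv3d_params(c_in, c_out, k)

print(f"standard conv weights:  {standard}")
print(f"separable conv weights: {separable}")
print(f"reduction factor:       {standard / separable:.1f}x")
```

The per-layer saving here is larger than the 2.3× whole-model figure in the abstract, which is expected: a full network also contains layers and parameters that the factorisation does not touch.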

@inproceedings{li2023less, title = {{{Less Is More}}: {{Reducing Task}} and {{Model Complexity}} for {{3D Point Cloud Semantic Segmentation}}}, gh_repo = {lim3d}, author = {Li, Li and Shum, Hubert P. H. and Breckon, Toby P.}, keywords = {point cloud, semantic segmentation, sparse convolution, depthwise separable convolution, autonomous driving}, year = {2023}, month = jun, publisher = {{IEEE}}, booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)}, }

2021

-

DurLAR: A High-Fidelity 128-Channel LiDAR Dataset with Panoramic Ambient and Reflectivity Imagery for Multi-Modal Autonomous Driving Applications. Li Li, K.N. Ismail, Hubert P. H. Shum, and Toby P. Breckon. In International Conference on 3D Vision (3DV), 2021

We present DurLAR, a high-fidelity 128-channel 3D LiDAR dataset with panoramic ambient (near infrared) and reflectivity imagery, as well as a sample benchmark using depth estimation for autonomous driving applications. Our driving platform is equipped with a high resolution 128-channel LiDAR, a 2MPix stereo camera, a lux meter and a GNSS/INS system. Ambient and reflectivity images are made available along with the LiDAR point clouds to facilitate multi-modal use of concurrent ambient and reflectivity scene information. Leveraging DurLAR, with a resolution exceeding that of prior benchmarks, we consider the task of monocular depth estimation and use this increased availability of higher resolution, yet sparse ground truth scene depth information to propose a novel joint supervised/self-supervised loss formulation. We compare performance over both our new DurLAR dataset, the established KITTI benchmark and the Cityscapes dataset. Our evaluation shows our joint use of supervised and self-supervised loss terms, enabled via the superior ground truth resolution and availability within DurLAR, improves the quantitative and qualitative performance of leading contemporary monocular depth estimation approaches (RMSE = 3.639, SqRel = 0.936).
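The two error figures quoted at the end of the abstract can be illustrated with the definitions commonly used in monocular depth evaluation: RMSE is the root of the mean squared depth error, and SqRel is the mean squared error divided by the ground-truth depth. The sketch below assumes those standard definitions (the paper's exact evaluation code is not reproduced here) and treats non-positive ground-truth values as pixels without a LiDAR return, reflecting the sparse ground truth the abstract mentions. The depth values are invented toy numbers.

```python
import math


def depth_errors(predicted, ground_truth):
    """Return (RMSE, SqRel) over pixels that have a valid ground-truth depth."""
    # Sparse LiDAR ground truth: keep only pixels with a positive depth.
    pairs = [(p, g) for p, g in zip(predicted, ground_truth) if g > 0]
    if not pairs:
        raise ValueError("no valid ground-truth depths")
    squared = [(p - g) ** 2 for p, g in pairs]
    rmse = math.sqrt(sum(squared) / len(pairs))
    sq_rel = sum(sq / g for sq, (_, g) in zip(squared, pairs)) / len(pairs)
    return rmse, sq_rel


# Hypothetical depths in metres; 0.0 marks a pixel with no ground truth.
predicted = [10.0, 21.0, 5.0, 40.0]
ground_truth = [8.0, 20.0, 0.0, 44.0]

rmse, sq_rel = depth_errors(predicted, ground_truth)
print(f"RMSE = {rmse:.3f}, SqRel = {sq_rel:.3f}")
```

Lower is better for both metrics; SqRel penalises errors on nearby surfaces more heavily because the squared error is divided by the true depth.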

@inproceedings{li21durlar, gh_repo = {durlar}, author = {Li, Li and Ismail, K.N. and Shum, Hubert P. H. and Breckon, Toby P.}, title = {{{DurLAR}}: A {{High-Fidelity}} {{128-Channel}} {{LiDAR Dataset}} with {{Panoramic Ambient and Reflectivity Imagery}} for {{Multi-Modal}} {{Autonomous Driving Applications}}}, booktitle = {International Conference on 3D Vision (3DV)}, year = {2021}, month = dec, publisher = {IEEE}, keywords = {autonomous driving, dataset, high resolution LiDAR, flash LiDAR, ground truth depth, dense depth, monocular depth estimation, stereo vision, 3D}, category = {automotive 3Dvision}, }